Ray Tracing, but for Sound

When you speak in a cathedral, your voice does not just travel directly to someone else’s ears; it reflects off domed ceilings, scatters across stone walls, and diffracts around columns. All these acoustic interactions shape what we recognize as reverberation: the persistence of sound in a space after the source itself has stopped.

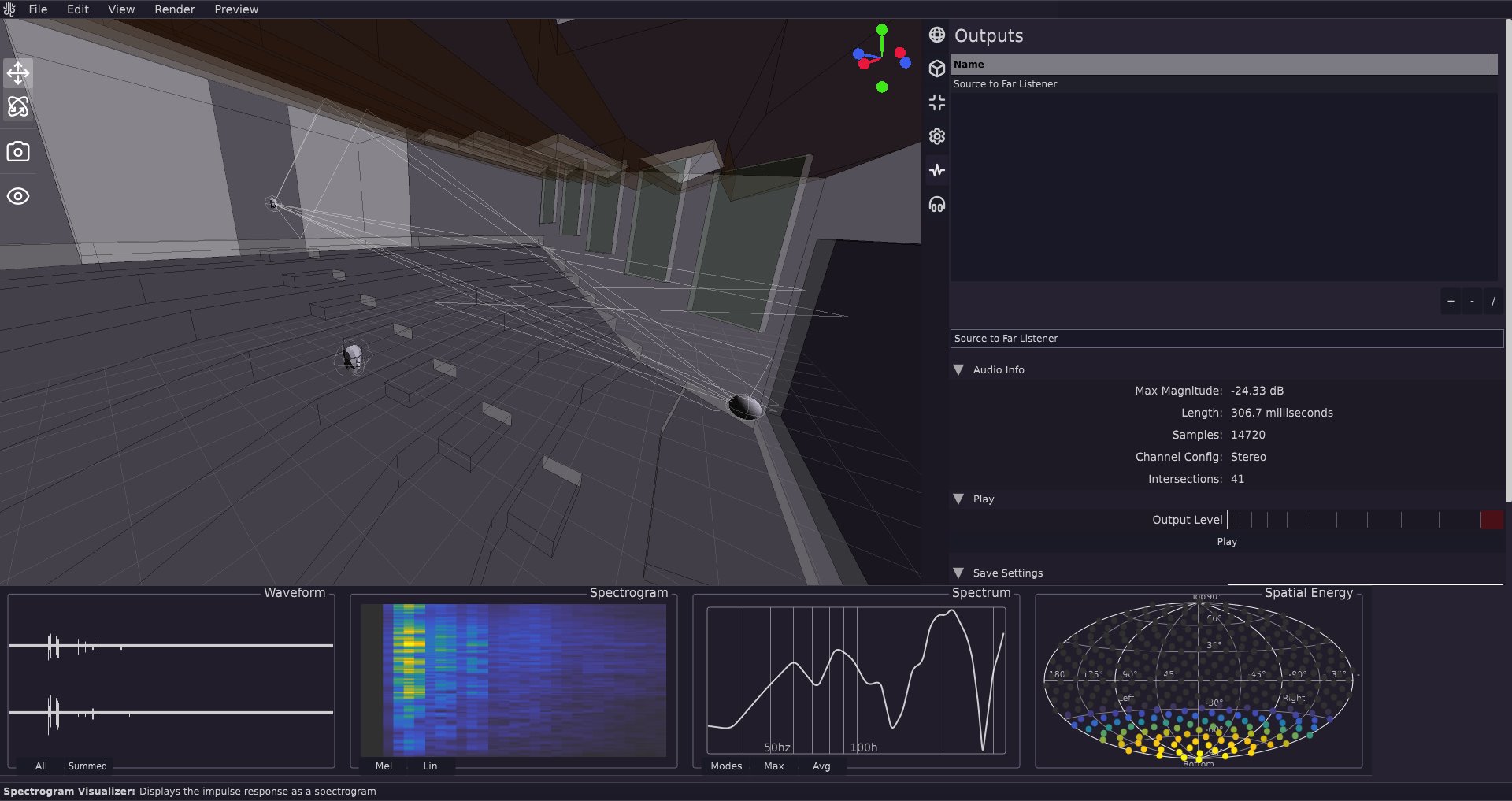

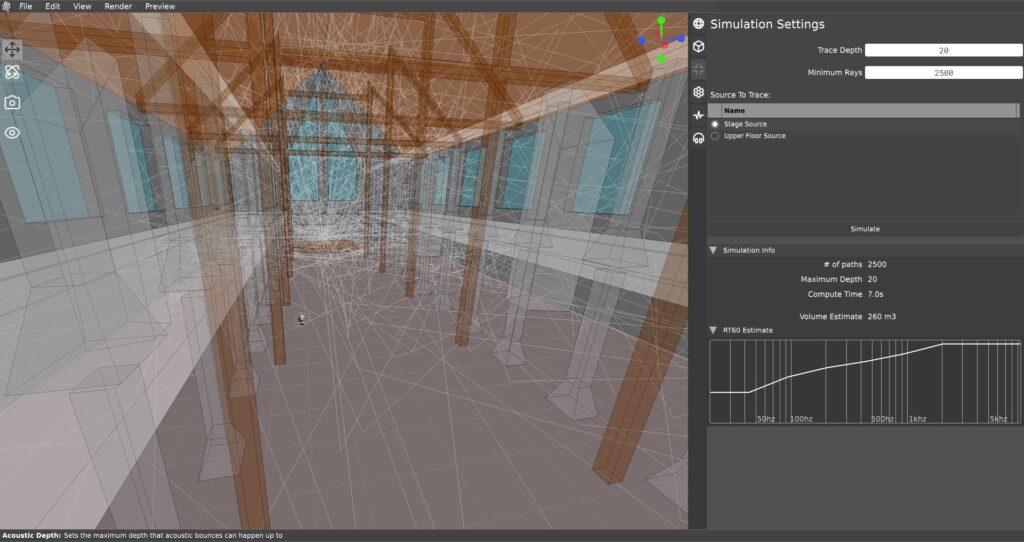

Capturing this complex behavior in digital audio requires more than simple delay lines or filters. That’s where our software Impulse comes in.

What Is Audio Ray Tracing?

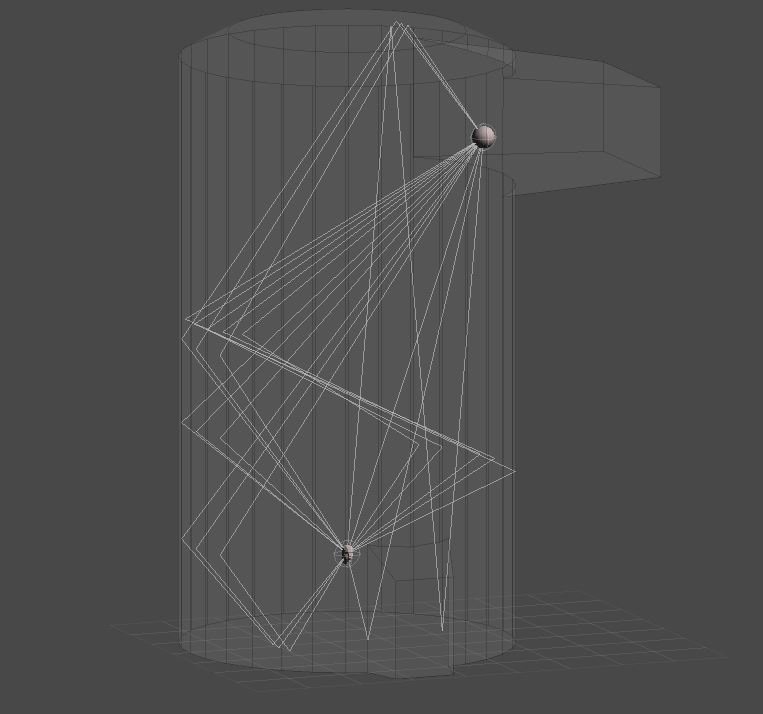

Borrowing its name from computer graphics (the algorithms are closely related), ray tracing in acoustics models how sound propagates through an environment by treating it as a set of rays. These rays are cast from a sound source and travel through space, bouncing off surfaces, being absorbed or scattered, and eventually reaching a listener (or a microphone, in simulation).

Sound is actually a wave, not a ray, but the ray approximation works well when the wavelength is small compared with the surfaces and distances involved, as in large spaces or at higher frequencies. This makes the technique especially useful in room acoustics, architectural design, game audio, and virtual reality systems that require realistic spatial audio.
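Whether the ray model is appropriate comes down to wavelength, λ = c / f, where c is roughly 343 m/s in air at room temperature. The short sketch below computes it. The helper `ray_approximation_reasonable` and its factor of 4 are a hypothetical rule of thumb for illustration only, not a threshold used by Impulse.

```python
# Speed of sound in dry air at about 20 °C, in metres per second.
SPEED_OF_SOUND = 343.0


def wavelength(frequency_hz: float, c: float = SPEED_OF_SOUND) -> float:
    """Wavelength in metres: lambda = c / f."""
    return c / frequency_hz


def ray_approximation_reasonable(frequency_hz: float, feature_size_m: float,
                                 ratio: float = 4.0) -> bool:
    """Hypothetical rule of thumb: treat a surface feature as ray-like when it
    spans at least `ratio` wavelengths. The default ratio is an assumption."""
    return feature_size_m >= ratio * wavelength(frequency_hz)


for f in (100, 1_000, 10_000):
    print(f"{f:>6} Hz -> wavelength {wavelength(f):.3f} m")
```

At 100 Hz the wavelength is about 3.4 m, comparable to a column or doorway, so wave effects such as diffraction matter; at 10 kHz it is about 3.4 cm, and sound behaves much more like a ray.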

How It Works

Here’s a basic outline of how audio ray tracing operates:

Sound Ray Emission

Rays are emitted in many directions from a source, simulating spherical propagation of sound. Each ray represents a potential path the sound could take.

Surface Interactions

When a ray hits a surface:

Some of its energy is absorbed and the rest is reflected, based on the surface’s absorption coefficient.

The angle of reflection equals the angle of incidence (specular reflection, as in optics).

Optionally, diffusion and scattering can be applied to simulate rough surfaces, which is far simpler than modelling the roughness in the geometry itself.
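A single surface hit can be sketched in Python as follows. This is a minimal sketch under stated assumptions: each material has a hypothetical absorption coefficient and scattering coefficient in [0, 1], and scattering is handled stochastically, so a ray bounces diffusely (cosine-weighted, Lambertian) with probability equal to the scattering coefficient and specularly otherwise. That is one common approach, not necessarily the one Impulse uses.

```python
import math
import random

Vec = tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def scale_add(a: Vec, b: Vec, k: float) -> Vec:
    """Return a + k * b."""
    return (a[0] + k * b[0], a[1] + k * b[1], a[2] + k * b[2])


def normalize(v: Vec) -> Vec:
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)


def specular(d: Vec, n: Vec) -> Vec:
    """Mirror reflection r = d - 2 (d . n) n: angle out equals angle in."""
    return scale_add(d, n, -2.0 * dot(d, n))


def random_unit_vector(rng: random.Random) -> Vec:
    """Uniform direction on the sphere (normalized Gaussian sample)."""
    while True:
        v = (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))
        if dot(v, v) > 1e-18:
            return normalize(v)


def lambertian(n: Vec, rng: random.Random) -> Vec:
    """Cosine-weighted diffuse direction: normalize(n + random unit vector)."""
    while True:
        v = scale_add(n, random_unit_vector(rng), 1.0)
        if dot(v, v) > 1e-18:
            return normalize(v)


def interact(d: Vec, energy: float, n: Vec, absorption: float,
             scattering: float, rng: random.Random) -> tuple[Vec, float]:
    """One ray-surface hit (hypothetical helper).

    n is the unit normal on the side the ray arrives from.
    """
    energy *= 1.0 - absorption          # the absorbed fraction is lost
    if rng.random() < scattering:       # rough surface: diffuse bounce
        return lambertian(n, rng), energy
    return specular(d, n), energy       # smooth surface: mirror bounce
```

A tracer would call `interact` at every hit and stop following a ray once its energy drops below some threshold or it reaches a bounce limit.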

Pathfinding to Listener

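Rays that reach the listener position, either directly or after one or more reflections, are collected as valid propagation paths. (The listener is typically modelled as a small sphere, since an infinitely thin ray would almost never hit a single point exactly.) These paths are used to compute the delay, attenuation, and spectral coloration of the arriving sound.

Impulse Response Generation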

The collected ray paths are rendered into an impulse response (IR). This IR represents how an impulse (like a clap) would sound in that environment, capturing the direct sound, the early reflections, and the late reverberant tail. Additional processing can also be applied.
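The step from collected paths to an impulse response can be sketched like this. The function name `render_ir` and the path format, a list of (total path length in metres, fraction of energy surviving the reflections), are hypothetical. Each path is delayed by its time of flight, length / c, and its amplitude is scaled by 1/r spherical spreading and the square root of its surviving energy, which suits discrete specular paths. The sketch is broadband: it ignores frequency-dependent absorption (spectral coloration) and air absorption, which a real renderer would track per frequency band.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, dry air at about 20 °C


def render_ir(paths: list[tuple[float, float]],
              sample_rate: int = 48_000) -> list[float]:
    """Turn (path_length_m, surviving_energy) pairs into a sampled IR.

    Simplified broadband sketch: no frequency bands, no air absorption.
    """
    if not paths:
        return []
    longest = max(length for length, _ in paths)
    n_samples = math.ceil(longest / SPEED_OF_SOUND * sample_rate) + 1
    ir = [0.0] * n_samples
    for length_m, energy in paths:
        # Delay: time of flight along the path, rounded to the nearest sample.
        index = round(length_m / SPEED_OF_SOUND * sample_rate)
        # Pressure falls off as 1/r and reflection losses scale energy, so the
        # amplitude scales with sqrt(energy). r is clamped to 1 m near the source.
        ir[index] += math.sqrt(energy) / max(length_m, 1.0)
    return ir
```

For example, `render_ir([(3.43, 1.0), (10.29, 0.7)])` places the direct sound 10 ms into the response and the reflection at 30 ms.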

Doing all of this in real time is infeasible in most cases: where realism is needed, upwards of hundreds of thousands of rays, each traced up to 100 bounces deep, are necessary. Impulse spreads these computations across multiple cores, which speeds up rendering.

Ray Tracing vs. Traditional Reverb Algorithms

Traditional algorithmic reverbs (a.k.a. pretty much every non-convolution reverb) use networks of delay lines, all-pass filters, and feedback to simulate the statistical properties of reverberation. They are efficient, but they do not model a specific space, just a general, room-like sound.
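For comparison, here is a minimal Schroeder-style algorithmic reverb: four parallel feedback comb filters followed by two all-pass filters in series. The delay times and gains are illustrative values in the spirit of Schroeder's classic design, not taken from any particular product. Notice that nothing in it describes a room: the delays and gains set the decay and echo density, but there is no geometry or material anywhere.

```python
def feedback_comb(x: list[float], delay: int, gain: float) -> list[float]:
    """Feedback comb filter: y[n] = x[n] + gain * y[n - delay]."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        y[n] = x[n] + (gain * y[n - delay] if n >= delay else 0.0)
    return y


def allpass(x: list[float], delay: int, gain: float) -> list[float]:
    """Schroeder all-pass: y[n] = -g*x[n] + x[n-D] + g*y[n-D]."""
    y = [0.0] * len(x)
    for n in range(len(x)):
        xd = x[n - delay] if n >= delay else 0.0
        yd = y[n - delay] if n >= delay else 0.0
        y[n] = -gain * x[n] + xd + gain * yd
    return y


def schroeder_reverb(x: list[float], sample_rate: int = 44_100) -> list[float]:
    # Parallel combs with mutually non-harmonic delays build up the decay.
    comb_ms = (29.7, 37.1, 41.1, 43.7)
    combs = [feedback_comb(x, int(ms * sample_rate / 1000), 0.84) for ms in comb_ms]
    mixed = [sum(vals) / len(combs) for vals in zip(*combs)]
    # Series all-passes smear each echo to increase echo density.
    out = allpass(mixed, int(5.0 * sample_rate / 1000), 0.7)
    return allpass(out, int(1.7 * sample_rate / 1000), 0.7)
```

Feeding it a unit impulse (`[1.0] + [0.0] * 10_000`) yields a generic decaying tail; changing the "room" means hand-tuning these numbers rather than describing walls.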

Ray tracing, in contrast:

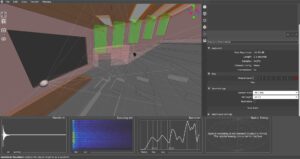

Models physical spaces based on geometry and material properties.

Produces spatially accurate reverb built from the reflections the real geometry would create.

Can simulate directionality, occlusion, and environmental dynamics (e.g., moving walls or opening doors).

Use Cases

Virtual Reality (VR) & Augmented Reality (AR): For realism, users expect sound to respond to their movement and to the shape of the environment.

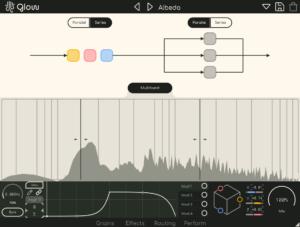

Game Audio Engines: Sound middleware such as Audiokinetic’s Wwise uses ray tracing to simulate occlusion and early reflections dynamically. Wwise also makes it easy to import custom-generated IRs, such as those created in Impulse.

Architectural Acoustics: Designers simulate how spaces will sound before construction begins.

Film and Media Production: Used to place sounds convincingly into CG environments.

Conclusion

Ray tracing in audio isn’t a tech gimmick or buzzword. It brings spatial realism to sound design by modeling how sound actually moves through the world. When applied to reverb simulation, it allows us to move beyond artificial room tones and into the realm of physically accurate soundscapes, whether you’re designing a concert hall or immersing players in a digital universe.

Our software Impulse is designed specifically to do these things. Both Impulse and Eigen (essentially Impulse in a smaller audio plugin format for the casual musician) are built on our impulse response rendering engine, which uses a hybrid algorithmic approach to deliver the best quality across a range of involvement levels.